NexusSplats: Efficient 3D Gaussian Splatting in the Wild

NexusSplats enables efficient and fine-grained 3D scene reconstruction under complex lighting and occlusion conditions.

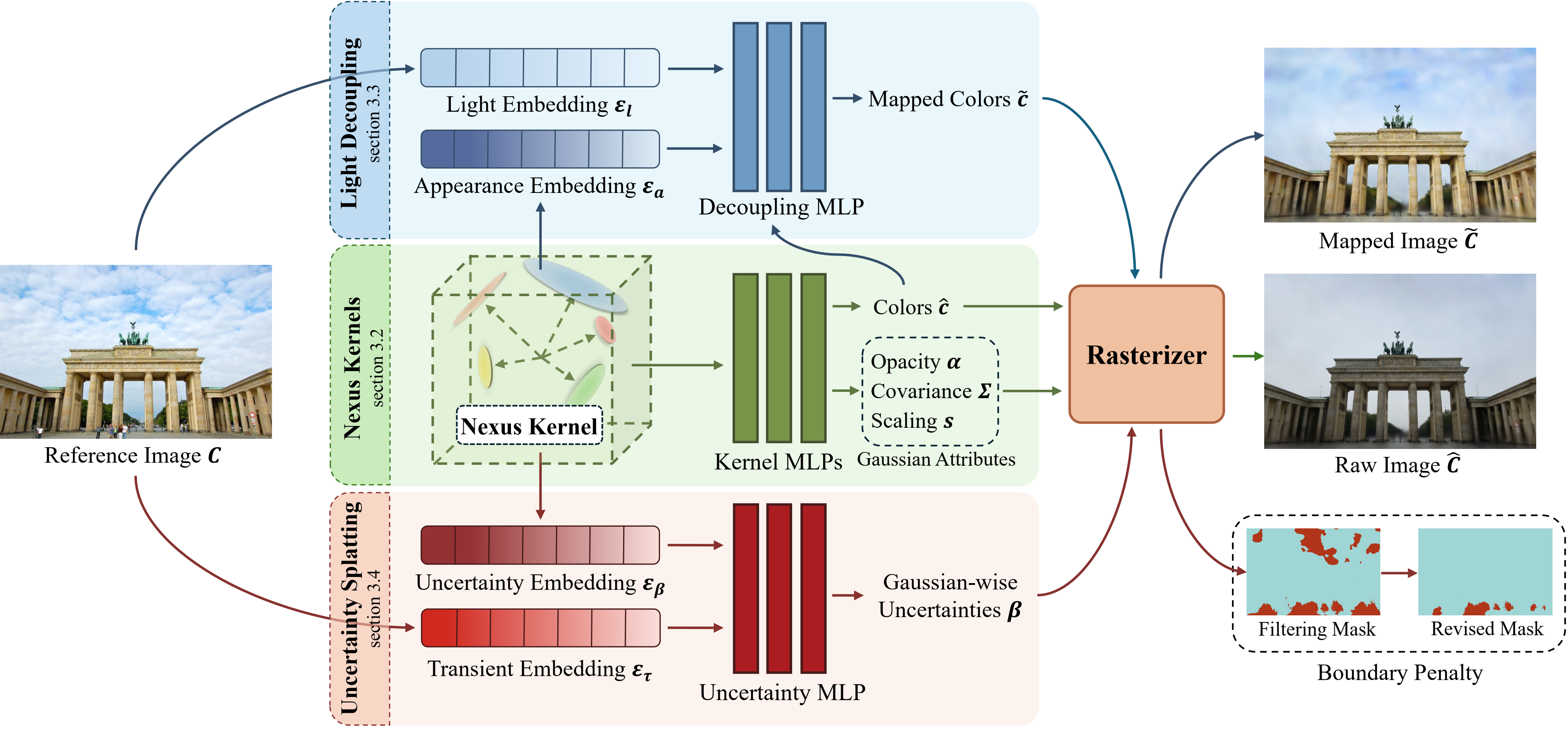

Photorealistic 3D reconstruction of unstructured real-world scenes remains challenging due to complex illumination variations and transient occlusions. Existing methods based on Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS) suffer from inefficient light decoupling and structure-agnostic occlusion handling. To address these limitations, we propose NexusSplats, an approach tailored for efficient and high-fidelity 3D scene reconstruction under complex lighting and occlusion conditions. In particular, NexusSplats leverages a hierarchical light decoupling strategy that performs centralized appearance learning, decoupling varying lighting conditions efficiently and effectively. Furthermore, we develop a structure-aware occlusion handling mechanism that establishes a nexus between 3D and 2D structures for fine-grained occlusion handling. Experimental results demonstrate that NexusSplats achieves state-of-the-art rendering quality and reduces the total number of parameters by 65.4%, leading to 2.7× faster reconstruction.

We propose Hierarchical Light Decoupling, which uses nexus kernels to manage 3D Gaussians hierarchically and to perform centralized appearance learning, so that lighting conditions are decoupled efficiently. This design reduces parameter redundancy while achieving superior texture fidelity under varying illumination.
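To illustrate why centralized appearance learning can reduce parameter redundancy, here is a minimal NumPy sketch. It is not the NexusSplats implementation: the voxel-based grouping rule, the embedding size, and the names `assign_to_kernels` and `appearance_param_counts` are all assumptions made for illustration. The only idea taken from the text is that a kernel manages a group of Gaussians and holds appearance parameters shared by that group.

```python
import numpy as np

def assign_to_kernels(positions, voxel_size):
    """Map each Gaussian center to a kernel index.

    Assumption: kernels are formed by voxelizing Gaussian centers.
    The actual grouping used by NexusSplats may differ.
    """
    voxel_idx = np.floor(positions / voxel_size).astype(np.int64)
    # Each occupied voxel becomes one kernel; the inverse index tells
    # which kernel every Gaussian belongs to.
    _, kernel_of_gaussian = np.unique(voxel_idx, axis=0, return_inverse=True)
    return kernel_of_gaussian.reshape(-1)

def appearance_param_counts(num_gaussians, num_kernels, embed_dim):
    """Compare per-Gaussian vs. per-kernel appearance embedding storage."""
    per_gaussian = num_gaussians * embed_dim
    centralized = num_kernels * embed_dim
    return per_gaussian, centralized

rng = np.random.default_rng(0)
positions = rng.uniform(0.0, 4.0, size=(1000, 3))  # toy Gaussian centers
kernel_of_gaussian = assign_to_kernels(positions, voxel_size=1.0)
num_kernels = int(kernel_of_gaussian.max()) + 1

embed_dim = 16  # hypothetical embedding size
kernel_embeddings = rng.normal(size=(num_kernels, embed_dim))

# Every Gaussian looks up the shared embedding of the kernel that manages it.
gaussian_embeddings = kernel_embeddings[kernel_of_gaussian]

per_gaussian, centralized = appearance_param_counts(
    len(positions), num_kernels, embed_dim
)
print(f"per-Gaussian embeddings: {per_gaussian} values")
print(f"per-kernel embeddings:   {centralized} values")
```

In this toy setup the appearance embeddings are stored once per kernel rather than once per Gaussian, so storage scales with the number of kernels. The parameter reduction reported for NexusSplats comes from the authors' full design, not from this simplified grouping.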

There are several concurrent works that also aim to extend 3DGS to handle in-the-wild data:

We sincerely appreciate the authors of 3DGS and NerfBaselines for their great work and released code. Please follow their licenses when using our code.

@article{tang2024nexussplats,
  title={NexusSplats: Efficient 3D Gaussian Splatting in the Wild},
  author={Tang, Yuzhou and Xu, Dejun and Hou, Yongjie and Wang, Zhenzhong and Jiang, Min},
  journal={arXiv preprint arXiv:2411.14514},
  year={2024}
}